
CVPR 2021 | Hitting the Mark: Finding Predefined Landmarks with Image Segmentation and Pixel Voting

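The goal of visual localization is to compute the six-degree-of-freedom (6-DoF) camera pose from an image, i.e., a 3-DoF position and a 3-DoF rotation. There are currently two mainstream approaches: SfM-based visual localization and scene coordinate regression.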
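To make "six degrees of freedom" concrete, the sketch below builds a camera pose from six numbers: an axis-angle rotation vector (3 DoF, converted to a rotation matrix with Rodrigues' formula) and a translation (3 DoF). The axis-angle parameterization, the reading of `[R | t]` as a world-to-camera transform, and the function names are our illustrative choices; quaternions or Euler angles are equally common ways to encode the three rotational degrees of freedom.

```python
import math

def pose_from_6dof(rvec, t):
    """Return a 3x4 matrix [R | t] from an axis-angle vector rvec and a translation t.

    Rodrigues' formula: R = I + sin(theta) S + (1 - cos(theta)) S^2, where
    theta = |rvec| and S is the skew-symmetric matrix of the unit rotation axis.
    """
    theta = math.sqrt(sum(x * x for x in rvec))
    if theta < 1e-12:
        R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    else:
        kx, ky, kz = (x / theta for x in rvec)
        S = [[0.0, -kz, ky], [kz, 0.0, -kx], [-ky, kx, 0.0]]
        S2 = [[sum(S[i][m] * S[m][j] for m in range(3)) for j in range(3)] for i in range(3)]
        s, c = math.sin(theta), 1.0 - math.cos(theta)
        R = [[(1.0 if i == j else 0.0) + s * S[i][j] + c * S2[i][j] for j in range(3)]
             for i in range(3)]
    return [R[i] + [float(t[i])] for i in range(3)]

def transform(P, X):
    """Apply [R | t] to a 3D point X, i.e. compute R X + t."""
    return [sum(P[i][j] * X[j] for j in range(3)) + P[i][3] for i in range(3)]

# Six numbers in, one pose out: rotate 90 degrees about z, then shift by (1, 2, 3).
P = pose_from_6dof([0.0, 0.0, math.pi / 2], [1.0, 2.0, 3.0])
print(transform(P, [1.0, 0.0, 0.0]))  # approximately [1.0, 3.0, 3.0]
```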

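Although scene coordinate regression has shown good visual localization performance in small static scenes, it still regresses many low-quality scene coordinates, which hurts accurate camera pose estimation. To address this problem, we propose VS-Net, a novel visual localization framework. Tested on multiple public datasets, it outperforms previous scene coordinate regression methods and several representative SfM-based visual localization methods.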

VS-Net: Voting and Segmentation for Visual Localization

Zhaoyang Huang¹,²\*, Han Zhou¹\*, Yijin Li¹, Bangbang Yang¹, Yan Xu², Xiaowei Zhou¹, Hujun Bao¹, Guofeng Zhang¹†, Hongsheng Li²,³

¹State Key Lab of CAD&CG, Zhejiang University‡; ²CUHK-SenseTime Joint Laboratory, The Chinese University of Hong Kong; ³School of CTS, Xidian University

Part 1 Paper Overview

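Although scene coordinate regression has shown good visual localization performance in small static scenes, it still regresses many low-quality scene coordinates, which hurts accurate camera pose estimation. To address this problem, we propose a novel visual localization framework that defines a set of learnable scene-specific landmarks and uses them to establish 2D-to-3D correspondences between the query image and the 3D map. In the landmark generation stage, the 3D surface of the target scene is uniformly divided into small patches, and the center of each patch is taken as a scene-specific landmark. To recover these landmarks robustly and accurately, we propose VS-Net, a network that jointly predicts segmentation and pixel votes: its segmentation branch assigns the pixels of a 2D image to different landmark patches, and its pixel voting branch estimates the location of each patch's landmark within the image. Because a scene can contain 5,000 or more landmarks, training a segmentation network with that many classes using the usual cross-entropy loss is prohibitively expensive in computation and GPU memory. We therefore further propose a novel prototype-based triplet loss with an online negative mining strategy, which efficiently supervises the training of semantic segmentation networks with a large number of labels. In summary, the main contributions of this work are:

We propose localizing with scene-specific landmarks and locating these landmarks in images via voting-by-segmentation, which makes camera pose estimation more accurate and robust.

Because the number of scene landmarks is very large (i.e., the segmentation has a very large number of labels), we propose a prototype-based triplet loss to handle image segmentation with a large label set. To the best of our knowledge, we are the first to address image segmentation with such a large number of labels. For segmentation at 640×480 resolution with 5,000 label classes, the proposed loss needs only about 0.1% of the computation and memory of the conventional cross-entropy loss (26.7 MFLOPs vs. 36.9 GFLOPs; 3.08 MB vs. 5.7 GB).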
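A back-of-the-envelope sketch shows where a figure like 5.7 GB for cross-entropy can come from. It assumes (our assumption, not stated above) that the full-resolution logit tensor of a single 640×480 image over 5,000 classes is stored in 32-bit floats; the count covers only the forward logits. The prototype-based loss avoids materializing this tensor because each pixel is compared with only a few prototypes rather than scored against every class.

```python
def dense_logits_bytes(height, width, num_classes, bytes_per_value=4):
    """Bytes needed to store one score per class for every pixel of one image."""
    return height * width * num_classes * bytes_per_value

ce_bytes = dense_logits_bytes(640, 480, 5000)  # fp32, batch size 1 (assumed)
ce_gib = ce_bytes / 2**30
print(f"{ce_bytes:,} bytes = {ce_gib:.2f} GiB")  # 6,144,000,000 bytes = 5.72 GiB
```

The result is consistent with the 5.7 GB quoted above, although the authors' exact measurement setup is not described here.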

Part 2 Related Work

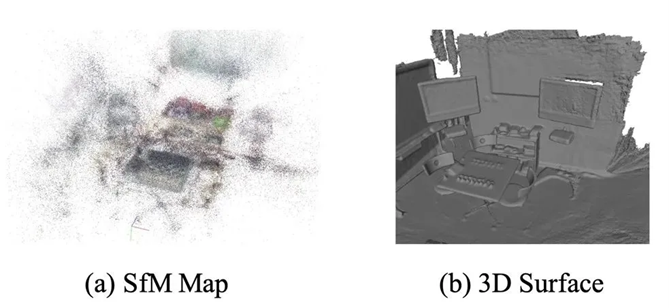

1. SfM (Structure-from-Motion)-based visual localization

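Traditional visual localization frameworks build the map with SfM using generic feature detectors and descriptors. Given a query image, they extract the same kind of 2D features and match them to 3D features in the map via their descriptors. The choice of feature detector and descriptor is critical in this framework, because it affects both the map quality and how well 2D-3D correspondences are matched for the query image, which in turn determines localization accuracy. In SfM-based systems, each 3D feature point in the map is reconstructed by triangulating multiple corresponding 2D points. These 3D feature points can be very cluttered (see Figure 1(a)): a single physical 3D point is often represented by several different 3D feature points, because large viewpoint changes between mapping images cause its 2D features to fail to match. Such a low-quality map degrades visual localization.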
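As a minimal illustration of triangulation, the sketch below recovers a 3D point from two viewing rays with the midpoint method: it finds the closest points on the two rays and returns the point halfway between them. This two-view method is a simplified stand-in chosen for brevity; full SfM pipelines typically triangulate from more views and refine the result with bundle adjustment. Camera centres and ray directions are assumed to be known already, and the function name is illustrative.

```python
def triangulate_midpoint(c1, d1, c2, d2, eps=1e-12):
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    def dot(u, v):
        return sum(x * y for x, y in zip(u, v))

    w0 = [p - q for p, q in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b  # zero when the rays are parallel
    if abs(denom) < eps:
        raise ValueError("rays are parallel; the point cannot be triangulated")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return [(x + y) / 2 for x, y in zip(p1, p2)]

# Two cameras one unit apart, both looking at the point (1, 1, 5).
print(triangulate_midpoint([0, 0, 0], [1, 1, 5], [1, 0, 0], [0, 1, 5]))
```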

Figure 1: Comparison of a map built with SfM and a map built with a depth sensor

2. Scene coordinate regression-based visual localization

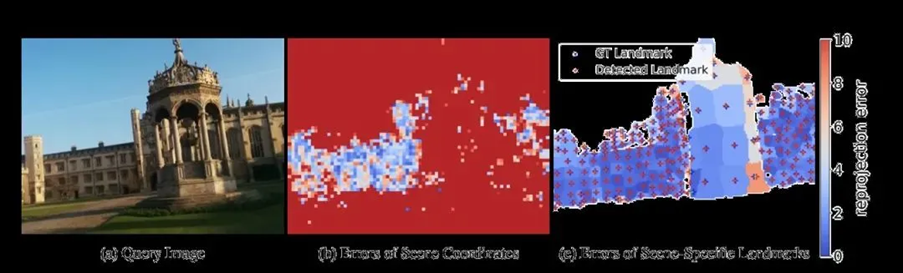

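With the development of deep learning, training a scene-specific neural network that encodes the map and using it to localize images of that scene has become another approach to visual localization. Scene coordinate regression methods train a neural network to predict the scene coordinate of every image pixel, which yields 2D-3D correspondences, and then compute the camera pose with the classic RANSAC-PnP method. This approach can use 3D maps that have no feature database but are more accurate (Figure 1(b) shows a dense map reconstructed with a depth sensor), and it has achieved excellent results in small and medium-sized scenes. However, the 2D-3D correspondences it produces are still not accurate enough and contain a high proportion of outliers (see Figure 2(b)). In contrast, the proposed VS-Net produces sparse but more accurate and robust 2D-3D correspondences (see Figure 2(c)), which improves both localization accuracy and robustness.

Figure 2: Comparison of reprojection errors of 2D-3D correspondences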
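Figure 2 compares correspondences by their reprojection error. The sketch below shows how such an error can be computed for a set of 2D-3D correspondences under a known pose, and how the fraction of correspondences under a pixel threshold (an inlier ratio) follows from it. It assumes a pinhole camera with zero skew and no lens distortion; the function names and the 5-pixel threshold are illustrative choices, not values from the paper.

```python
import math

def project(point, K, R, t):
    """Pinhole projection x ~ K (R X + t); skew and lens distortion are ignored."""
    cam = [sum(R[r][c] * point[c] for c in range(3)) + t[r] for r in range(3)]
    u = K[0][0] * cam[0] / cam[2] + K[0][2]
    v = K[1][1] * cam[1] / cam[2] + K[1][2]
    return u, v

def reprojection_errors(points_2d, points_3d, K, R, t):
    """Pixel distance between each observed 2D point and its projected 3D partner."""
    errors = []
    for (u, v), X in zip(points_2d, points_3d):
        pu, pv = project(X, K, R, t)
        errors.append(math.hypot(pu - u, pv - v))
    return errors

def inlier_ratio(errors, threshold_px=5.0):
    """Fraction of correspondences whose reprojection error is below threshold_px."""
    return sum(e < threshold_px for e in errors) / len(errors)

K = [[500, 0, 320], [0, 500, 240], [0, 0, 1]]
R = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
t = [0, 0, 0]
errs = reprojection_errors([(320, 240), (371, 240), (400, 300)],
                           [(0, 0, 2), (0.2, 0, 2), (0, 0, 2)], K, R, t)
print(errs, inlier_ratio(errs))  # the third pair is an outlier
```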

Part 3 Method

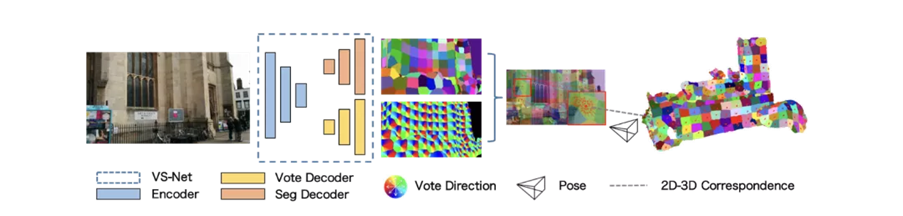

Figure 3: The VS-Net visual localization framework

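Scene coordinate regression methods are well suited to visual localization in small-scale scenes. They typically establish a 2D-3D correspondence (i.e., a scene coordinate) between every pixel of the query image and the 3D surface of the scene. However, the scene coordinates predicted for a large fraction of pixels have high reprojection errors, which increases the chance of localization failure and lowers the accuracy of the subsequent RANSAC-PnP step. To address these problems, we propose using VS-Net to identify a set of scene-specific landmarks (Figure 3) and establish their correspondences with the 3D map for accurate localization. Scene-specific landmarks are a sparse set of 3D points defined directly on the 3D surface of the scene. We uniformly partition the scene's 3D surface into a set of patches and choose the geometric center of each patch as a scene-specific landmark. Given training images from different viewpoints, we can project these landmarks and their patches onto the image plane to identify their corresponding pixels. In this way, we generate landmark annotations for all training images.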

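In the training stage, we use pixel-wise segmentation, similar to semantic segmentation, to predict the 3D landmark ID corresponding to each pixel. In parallel, we add a branch that localizes landmarks in 2D: it outputs direction vectors pointing to the landmarks' 2D projections, so that each pixel is responsible for estimating the 2D position of its landmark. In the inference stage, given a new input image, VS-Net outputs a landmark segmentation map and a landmark position voting map, from which 2D-to-3D landmark correspondences are established. Unlike scene coordinate regression, where 2D-to-3D outliers can only be filtered by RANSAC-PnP, our method directly discards any landmark whose voting confidence is not high enough, which avoids estimating the camera pose from poorly localized landmarks (Figure 2). Moreover, correspondences built from scene coordinates are easily affected by unstable predictions, whereas in our method slightly perturbed votes do not affect the accuracy of the voted landmark position, because they are filtered out by the per-patch RANSAC intersection step.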

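Scene-specific landmark generation:

The 3D surface of the scene is partitioned into patches with Supervoxel [36], and the patch centers $q_1, \dots, q_n \in \mathbb{R}^3$ are selected as scene-specific landmarks for localization. Because Supervoxel produces patches of similar size, the generated landmarks are mostly spread evenly over the 3D surface, which provides enough landmarks from different viewpoints and therefore benefits localization robustness.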
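Assuming the surface has already been partitioned so that every surface point carries a patch label (the supervoxel algorithm itself is not reimplemented here), the landmark of each patch can be taken as the mean of its points. The sketch below does exactly that; treating the point mean as the patch's "geometric center" and the function name are our assumptions.

```python
from collections import defaultdict

def patch_centers(points, patch_ids):
    """Return {patch_id: (x, y, z)} with the mean of each patch's surface points.

    Patch ids are assumed to start at 1 so that 0 stays free for the background label.
    """
    sums = defaultdict(lambda: [0.0, 0.0, 0.0])
    counts = defaultdict(int)
    for (x, y, z), pid in zip(points, patch_ids):
        acc = sums[pid]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        counts[pid] += 1
    return {pid: tuple(v / counts[pid] for v in acc) for pid, acc in sums.items()}

# Two tiny patches of two points each.
print(patch_centers([(0, 0, 0), (2, 0, 0), (0, 0, 4), (0, 2, 4)], [1, 1, 2, 2]))
```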

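Given the training images and camera poses of the scene, the 3D scene-specific landmarks $\{q_1, \dots, q_n\}$ and their associated 3D patches can be projected onto the 2D images. For each image, we can generate a landmark segmentation map $S \in \mathbb{Z}^{H \times W}$ and a landmark position voting map $d \in \mathbb{R}^{H \times W \times 2}$. For patch-based landmark segmentation, the pixel at coordinates $p_i = (u_i, v_i)$ is assigned the landmark label (ID) determined by the projection of the 3D patches. If a pixel's region is not covered by any projected patch, such as the sky or distant objects, it is assigned the background label 0, meaning that the pixel is not useful for visual localization.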
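A simplified way to produce the segmentation map $S$ is sketched below. Instead of rasterizing patch meshes, it splats surface points that carry patch labels into the image with a z-buffer, so the nearest surface wins and pixels that no point reaches keep label 0. The point-splatting shortcut, the assumption that points are already in camera coordinates, and the function name are ours; a real pipeline would render the patches densely to avoid holes.

```python
def render_label_map(points_cam, labels, fx, fy, cx, cy, height, width):
    """Splat labeled camera-frame 3D points into a height x width label map.

    A z-buffer keeps the label of the nearest point per pixel; untouched
    pixels keep the background label 0.
    """
    label_map = [[0] * width for _ in range(height)]
    depth = [[float("inf")] * width for _ in range(height)]
    for (x, y, z), lab in zip(points_cam, labels):
        if z <= 0:
            continue  # behind the camera
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= u < width and 0 <= v < height and z < depth[v][u]:
            depth[v][u] = z
            label_map[v][u] = lab
    return label_map

# Point (2, 2, 2) projects onto the same pixel as (1, 1, 1) but lies behind it.
S = render_label_map([(1, 1, 1), (2, 2, 2), (3, 0, 1), (0, 0, -1)], [5, 7, 9, 3],
                     1, 1, 0, 0, 4, 4)
print(S)
```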

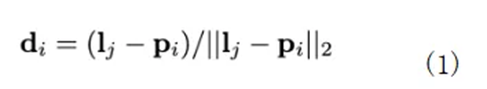

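For landmark position voting, we first compute the projected 2D position of landmark $q_j$, $l_j = P(q_j, K, C) \in \mathbb{R}^2$, by projecting the 3D landmark with the camera intrinsic matrix $K$ and the camera pose $C$. Each pixel belonging to landmark $j$ is responsible for predicting a 2D direction vector $d_i \in \mathbb{R}^2$ that points to the 2D projection of $j$, i.e.,

$$d_i = \frac{l_j - p_i}{\left\| l_j - p_i \right\|_2},$$

where $d_i$ is a normalized 2D vector giving the direction of landmark $j$.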
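The ground-truth voting map follows directly from the normalized direction above. The sketch assumes the landmark projections $l_j$ have already been computed and treats pixel $p_i = (u, v)$ as integer column/row coordinates; background pixels, and the degenerate case of a pixel lying exactly on its landmark, get a zero vector. The function name and these conventions are illustrative.

```python
import math

def direction_map(label_map, landmarks_2d):
    """Ground-truth voting map: d_i = (l_j - p_i) / ||l_j - p_i||_2 for pixels of landmark j.

    label_map is a list of rows of landmark ids (0 = background);
    landmarks_2d maps a landmark id j to its projected 2D position l_j = (u, v).
    """
    height, width = len(label_map), len(label_map[0])
    d = [[(0.0, 0.0)] * width for _ in range(height)]
    for v in range(height):
        for u in range(width):
            j = label_map[v][u]
            if j == 0 or j not in landmarks_2d:
                continue
            lu, lv = landmarks_2d[j]
            du, dv = lu - u, lv - v
            n = math.hypot(du, dv)
            if n > 0:
                d[v][u] = (du / n, dv / n)
    return d

D = direction_map([[1, 0], [1, 1]], {1: (1.0, 0.0)})
print(D)
```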

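Once the ground-truth landmark segmentation map and direction voting map are defined, we can supervise VS-Net to predict both maps. After training, VS-Net predicts the segmentation map and voting map of a query image, from which we establish accurate 2D-to-3D correspondences for robust visual localization.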

Prototype-based triplet loss with online negative mining for the segmentation branch:

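Conventional semantic segmentation usually supervises the full one-hot classification vector of every predicted pixel with a cross-entropy loss. Our landmark segmentation, however, must output a segmentation map with a very large number of classes (landmarks) to identify each scene-specific landmark. Neither the per-pixel cross-entropy loss of conventional semantic segmentation nor a conventional triplet loss is practical in this setting.

To solve this problem, we propose a novel prototype-based triplet segmentation loss with an online negative sampling strategy to supervise semantic segmentation with a large number of classes. It maintains and updates a set of learnable class prototype embeddings, each representing one semantic class; $P_j$ denotes the embedding of class $j$. Intuitively, the embeddings of pixels of class $j$ should be close to $P_j$ and far from the prototypes of the other classes. The proposed loss is designed as a triplet loss with an online negative sampling strategy.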

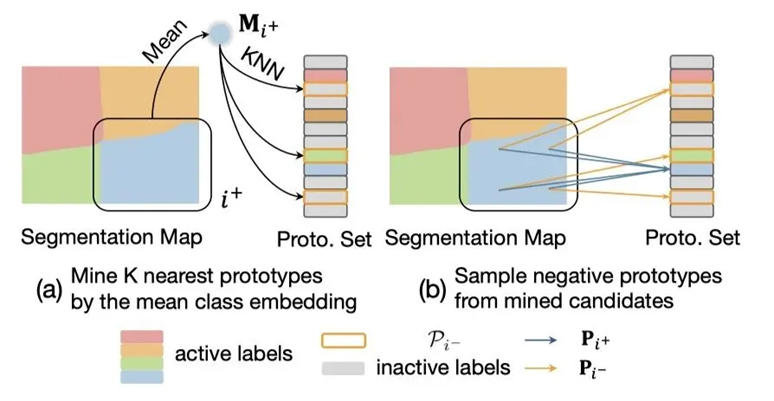

Figure 4: Online negative sampling strategies

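Given the per-pixel feature map $E$ output by the segmentation branch of VS-Net and the class prototype set $P$, we first L2-normalize all features and prototypes, then optimize them with the feature-prototype triplet loss so that each pixel's feature moves closer to the prototype of its own class and away from the prototypes of other classes. We designed two strategies for positive and negative sampling. The first averages, dimension by dimension, all embeddings in the current predicted feature map that share the same landmark ID, uses the result as the anchor feature vector, and selects positive and negative samples from the prototype set to supervise training (Figure 4(a)). Negatives chosen this way may not be diverse enough, so to keep the negatives diverse without significantly increasing computation, the second strategy computes the k nearest negative samples for each pixel (Figure 4(b)).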

其中的:

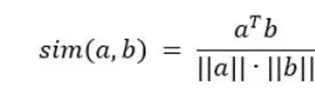

表示像素向量和类原型向量之间的余弦相似度,m 代表三元组损失的边际,P_(i+) 表示与像素 i 相对应的 ground-truth(正)类原型向量,P_(i-) 表示非对应的(负)类原型向量的采样。
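A minimal sketch of a prototype-based triplet loss built from the quantities just defined. It assumes the standard hinge form $\max(0,\, m - \cos(E_i, P_{i+}) + \cos(E_i, P_{i-}))$ averaged over pixels; the exact form, the averaging, the margin value 0.5, and the function names are assumptions for illustration. Note that each pixel touches only two prototypes instead of being scored against all classes.

```python
import math

def cosine(a, b):
    """Cosine similarity; equals the dot product once both vectors are L2-normalized."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def prototype_triplet_loss(embeddings, pos_ids, neg_ids, prototypes, margin=0.5):
    """Mean over pixels of max(0, m - cos(E_i, P_i+) + cos(E_i, P_i-)).

    prototypes maps a class id to its prototype vector; pos_ids / neg_ids give,
    per pixel, the ground-truth class and one sampled negative class.
    """
    total = 0.0
    for e, pos, neg in zip(embeddings, pos_ids, neg_ids):
        s_pos = cosine(e, prototypes[pos])
        s_neg = cosine(e, prototypes[neg])
        total += max(0.0, margin - s_pos + s_neg)
    return total / len(embeddings)

protos = {1: [1.0, 0.0], 2: [0.0, 1.0]}
# Pixel 1 already sits on its prototype (zero loss); pixel 2 sits on the negative.
print(prototype_triplet_loss([[1.0, 0.0], [0.0, 1.0]], [1, 1], [2, 2], protos))
```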

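For each pixel, how the negative class prototype $P_{i-}$ is chosen in the above prototype-based triplet loss has a crucial effect on the final performance: randomly sampled negative classes make training too easy. Given an input image, we observe that the number of active landmarks (landmarks that own at least one pixel in the image) is limited. Moreover, pixels belonging to the same landmark patch are close to each other in feature space and therefore share similar negative prototypes. We thus propose mining representative negative classes for each active landmark; each pixel then randomly samples a negative class from the mined set to form representative triplets.

Specifically, given a pixel $i$ with landmark index (class) $i+$, we first retrieve all pixel embeddings in the input image associated with landmark $i+$ and average them to obtain the mean class embedding $M_{i+}$ of that landmark in the current image. The mean class embedding is then used to retrieve the $k$ nearest negative prototypes from the prototype embedding set; these kNN negative prototypes can be regarded as hard negatives. The triplet loss for pixel $i$ uses a single negative prototype $P_{i-}$ sampled uniformly from this kNN negative set (Eq. (2)).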
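The mining step can be sketched as follows: average the embeddings of each active landmark into $M_j$, rank all other prototypes by cosine similarity to $M_j$, keep the top $k$, and let every pixel draw one negative uniformly from its landmark's list. Plain lists stand in for tensors, and the function names, the background-skipping rule, and the seeded `random.Random` are illustrative assumptions.

```python
import math
import random
from collections import defaultdict

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def mine_knn_negatives(embeddings, labels, prototypes, k):
    """For each active landmark j, return the k prototypes (excluding P_j) closest to M_j."""
    sums, counts = {}, defaultdict(int)
    for e, j in zip(embeddings, labels):
        if j == 0:
            continue  # background pixels take no part in the triplet loss
        acc = sums.setdefault(j, [0.0] * len(e))
        for dim, x in enumerate(e):
            acc[dim] += x
        counts[j] += 1
    mined = {}
    for j, acc in sums.items():
        mean = [x / counts[j] for x in acc]  # M_j, the mean class embedding
        negatives = [c for c in prototypes if c != j]
        negatives.sort(key=lambda c: cosine(mean, prototypes[c]), reverse=True)
        mined[j] = negatives[:k]
    return mined

def sample_negatives(labels, mined, rng):
    """Each foreground pixel draws one negative uniformly from its landmark's kNN set."""
    return [rng.choice(mined[j]) if j != 0 else None for j in labels]

protos = {1: [1.0, 0.0], 2: [0.9, 0.1], 3: [0.0, 1.0], 4: [-1.0, 0.0]}
labels = [1, 1, 0]
mined = mine_knn_negatives([[1.0, 0.0], [0.8, 0.2], [0.0, 1.0]], labels, protos, k=2)
print(mined, sample_negatives(labels, mined, random.Random(0)))
```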

Direction-vector voting network:

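Given the segmentation map produced by the segmentation decoder described above, each pixel of the input image is assigned either a landmark label or an invalid label that marks objects or regions that are too far away (e.g., the sky). We use a second, voting decoder to determine the projected 2D positions of the landmarks in the image. For each pixel, the decoder outputs a 2D direction vector pointing to the 2D position of its landmark. The voting decoder is supervised with the following loss:

$$\mathcal{L}_{\text{vote}} = \sum_i \left\| d_i - \hat{d}_i \right\|_1,$$

where $\|\cdot\|_1$ denotes the L1 norm, and $d_i$ and $\hat{d}_i$ denote the ground-truth and predicted voting directions of pixel $i$, respectively.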
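A sketch of this L1 voting loss on flattened per-pixel lists. Restricting the sum to pixels with a landmark label (label ≠ 0) and dividing by their count are our assumptions; the text above only specifies an L1 penalty per pixel. The function name is illustrative.

```python
def vote_loss(gt_dirs, pred_dirs, labels):
    """Mean L1 distance between ground-truth and predicted 2D directions.

    Only foreground pixels (label != 0) are counted; background pixels have no
    landmark to point at.
    """
    total, count = 0.0, 0
    for (gu, gv), (pu, pv), lab in zip(gt_dirs, pred_dirs, labels):
        if lab == 0:
            continue
        total += abs(gu - pu) + abs(gv - pv)
        count += 1
    return total / count if count else 0.0

# The third pixel is background, so its large error is ignored.
print(vote_loss([(1, 0), (0, 1), (0, 0)], [(0.5, 0), (0, 0), (1, 1)], [1, 2, 0]))
```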

Training and localization:

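The overall loss $\mathcal{L}_{\text{overall}}$ combines the landmark segmentation loss $\mathcal{L}_{\text{seg}}$ and the landmark direction voting loss $\mathcal{L}_{\text{vote}}$:

$$\mathcal{L}_{\text{overall}} = \mathcal{L}_{\text{seg}} + \lambda \mathcal{L}_{\text{vote}},$$

where $\lambda$ weights the contribution of the loss terms.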

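In the localization stage, we group the pixels predicted with the same landmark label in the landmark segmentation map and estimate the corresponding landmark position by intersecting their direction votes in the predicted voting map. We call this the voting-by-segmentation algorithm.

Specifically, given the segmentation map, we first filter out landmark patches with fewer pixels than a threshold $T_s$, because the 2D landmark positions voted by very small patches are usually unstable. An initial estimate of each landmark's 2D position is computed with a RANSAC-based vector-intersection model, which generates multiple landmark position hypotheses by intersecting two randomly sampled direction votes and selects the hypothesis with the most inlier votes. The position is then refined with an iterative EM algorithm. In the E-step, we collect the inlier voting vectors of landmark $j$ from a circular region around the current estimate. In the M-step, we compute the updated landmark position from the votes in that circular region using the least-squares method introduced by Antonio [2]. During the iterations, a voted landmark that is not supported by enough direction votes, which indicates low voting consistency, is discarded.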
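The voting-by-segmentation step for a single landmark patch can be sketched as follows. It assumes the predicted directions are unit vectors, treats each vote as a line through its pixel, intersects random pairs of votes to form RANSAC hypotheses, and counts a vote as an inlier when its direction is within a cosine threshold of the direction to the hypothesis. The M-step uses a plain normal-equation least-squares intersection of lines, a swapped-in stand-in rather than the specific method of [2], and the E-step's "circular region" is interpreted here as votes whose line passes within `radius` pixels of the current estimate. All thresholds (`min_pixels` playing the role of $T_s$, `cos_thresh`, `radius`, `min_votes`) and function names are illustrative.

```python
import math
import random

def cross(a, b):
    """z-component of the 2D cross product."""
    return a[0] * b[1] - a[1] * b[0]

def intersect(p1, d1, p2, d2, eps=1e-6):
    """Intersection of the lines p1 + s*d1 and p2 + t*d2, or None if nearly parallel."""
    den = cross(d1, d2)
    if abs(den) < eps:
        return None
    s = cross((p2[0] - p1[0], p2[1] - p1[1]), d2) / den
    return (p1[0] + s * d1[0], p1[1] + s * d1[1])

def agrees(p, d, x, cos_thresh):
    """True if the unit vote d at pixel p points towards x within cos_thresh."""
    vx, vy = x[0] - p[0], x[1] - p[1]
    n = math.hypot(vx, vy)
    return n > 0 and (vx * d[0] + vy * d[1]) / n > cos_thresh

def least_squares_point(votes):
    """Point minimizing the summed squared perpendicular distance to all vote lines."""
    a11 = a12 = a22 = b1 = b2 = 0.0
    for (px, py), (dx, dy) in votes:
        m11, m12, m22 = 1.0 - dx * dx, -dx * dy, 1.0 - dy * dy  # I - d d^T
        a11 += m11
        a12 += m12
        a22 += m22
        b1 += m11 * px + m12 * py
        b2 += m12 * px + m22 * py
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-9:
        return None
    return ((a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det)

def vote_landmark(pixels, dirs, iters=64, cos_thresh=0.99, radius=3.0,
                  em_steps=3, min_pixels=20, min_votes=10, rng=None):
    if len(pixels) < max(2, min_pixels):
        return None  # patch too small: its vote is considered unstable
    rng = rng or random.Random(0)
    votes = list(zip(pixels, dirs))
    best, best_count = None, -1
    for _ in range(iters):  # RANSAC over pairwise intersections
        (p1, d1), (p2, d2) = rng.sample(votes, 2)
        h = intersect(p1, d1, p2, d2)
        if h is None:
            continue
        count = sum(agrees(p, d, h, cos_thresh) for p, d in votes)
        if count > best_count:
            best, best_count = h, count
    if best is None:
        return None
    x = best
    for _ in range(em_steps):
        # E-step (assumed form): votes pointing at x whose line passes near x.
        inliers = [(p, d) for p, d in votes
                   if agrees(p, d, x, 0.0)
                   and abs(cross((x[0] - p[0], x[1] - p[1]), d)) < radius]
        if len(inliers) < min_votes:
            return None  # weak support: discard this landmark
        x = least_squares_point(inliers)  # M-step
        if x is None:
            return None
    return x

# Example: exact votes from a 10 x 10 pixel grid toward a landmark at (50, 40).
target = (50.0, 40.0)
pixels, dirs = [], []
for u in range(30, 70, 4):
    for v in range(20, 60, 4):
        if (u, v) == (50, 40):
            continue  # a pixel sitting on the landmark has no direction
        du, dv = target[0] - u, target[1] - v
        n = math.hypot(du, dv)
        pixels.append((float(u), float(v)))
        dirs.append((du / n, dv / n))
print(vote_landmark(pixels, dirs))  # close to (50.0, 40.0)
```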

Part 4 Experimental Results

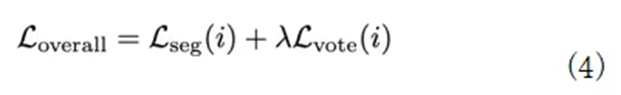

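We compare our method with SfM-based and scene coordinate regression-based visual localization methods on two datasets, Microsoft 7-Scenes and Cambridge Landmarks. As shown in Table 1, the proposed localization scheme based on scene-specific landmarks achieves the best accuracy in all scenes and significantly outperforms the other methods in some of them (e.g., GreatCourt and Office).

Table 1: Comparison of visual localization accuracy, measured by the median camera translation error and the median camera rotation error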
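Table 1 reports medians of per-image translation and rotation errors. A common way to compute them is sketched below, assuming each pose is given as a rotation matrix plus a camera centre, translation error is the distance between centres, and rotation error is the angle of the relative rotation $R_{\text{est}} R_{\text{gt}}^\top$. The exact conventions of each benchmark are not specified above, and the function names are illustrative.

```python
import math
import statistics

def rotation_error_deg(R_est, R_gt):
    """Angle in degrees of the relative rotation R_est R_gt^T."""
    # trace(A B^T) equals the element-wise sum of A[i][j] * B[i][j]
    trace = sum(R_est[i][j] * R_gt[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # clamp against rounding
    return math.degrees(math.acos(cos_angle))

def median_errors(estimates, ground_truth):
    """Median translation error (unit of the camera centres) and median rotation error (degrees).

    Each pose is a pair (R, c): a 3x3 rotation as nested lists and a camera centre.
    """
    t_errs = [math.dist(c_e, c_g) for (_, c_e), (_, c_g) in zip(estimates, ground_truth)]
    r_errs = [rotation_error_deg(R_e, R_g) for (R_e, _), (R_g, _) in zip(estimates, ground_truth)]
    return statistics.median(t_errs), statistics.median(r_errs)

def rot_z(deg):
    """Rotation about the z axis, used only for the example below."""
    a = math.radians(deg)
    return [[math.cos(a), -math.sin(a), 0.0], [math.sin(a), math.cos(a), 0.0], [0.0, 0.0, 1.0]]

I3 = rot_z(0.0)
est = [(rot_z(10.0), (0.0, 0.0, 0.0)), (I3, (0.03, 0.0, 0.0)), (rot_z(2.0), (0.0, 0.04, 0.0))]
gt = [(I3, (0.0, 0.0, 0.0))] * 3
print(median_errors(est, gt))  # about 0.03 and about 2 degrees
```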

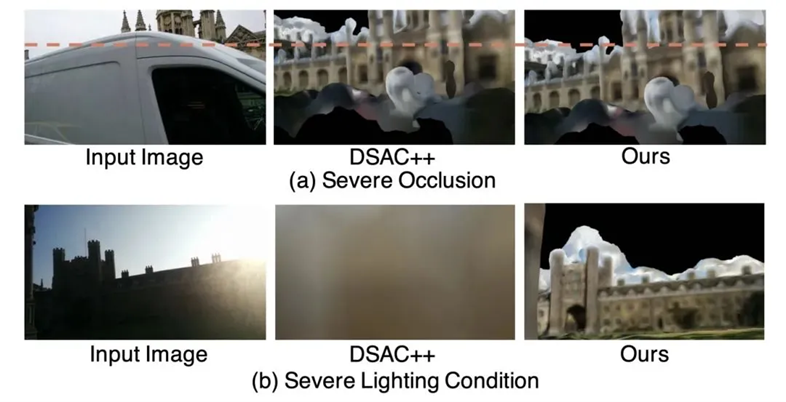

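We also compare visual localization results on some challenging query images. For a given query image, after computing the camera pose with the localization system, we project the reconstructed 3D model into that camera pose. Comparing the query image with the reprojected rendering gives a qualitative assessment of the localization result. As shown in Figure 5, our method still estimates the camera pose well despite severe occlusion by dynamic objects (Figure 5(a)) and poor lighting conditions (Figure 5(b)).

Figure 5: Visual localization on challenging images

References: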

[1]Sameer Agarwal, Yasutaka Furukawa, Noah Snavely, Ian Simon, Brian Curless, Steven M Seitz, and Richard Szeliski. Building rome in a day. Communications of the ACM, 54(10):105–112, 2011.

[2]Franklin Antonio. Faster line segment intersection. In Graphics Gems III (IBM Version), pages 199–202. Elsevier, 1992.

[3]Relja Arandjelovic, Petr Gronat, Akihiko Torii, Tomas Pajdla, and Josef Sivic. NetVLAD: CNN architecture for weakly supervised place recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 5297–5307, 2016.

[4]Clemens Arth, Daniel Wagner, Manfred Klopschitz, Arnold Irschara, and Dieter Schmalstieg. Wide area localization on mobile phones. In 2009 8th IEEE International Symposium on Mixed and Augmented Reality, pages 73–82. IEEE, 2009.

[5]Nicolas Aziere and Sinisa Todorovic. Ensemble deep manifold similarity learning using hard proxies. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 7299–7307, 2019.

[6]Herbert Bay, Tinne Tuytelaars, and Luc Van Gool. SURF: Speeded up robust features. In Proceedings of the European conference on computer vision, pages 404–417. Springer, 2006.

[7]Eric Brachmann, Alexander Krull, Sebastian Nowozin, Jamie Shotton, Frank Michel, Stefan Gumhold, and Carsten Rother. DSAC - Differentiable RANSAC for camera localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 6684–6692, 2017.

[8]Eric Brachmann and Carsten Rother. Learning less is more - 6D camera localization via 3D surface regression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 4654–4662, 2018.

[9]Eric Brachmann and Carsten Rother. Expert sample consensus applied to camera re-localization. In Proceedings of the IEEE International Conference on Computer Vision, pages 7525–7534, 2019.

[10]Samarth Brahmbhatt, Jinwei Gu, Kihwan Kim, James Hays, and Jan Kautz. Geometry-aware learning of maps for camera localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2616–2625, 2018.

[11]Ignas Budvytis, Marvin Teichmann, Tomas Vojir, and Roberto Cipolla. Large scale joint semantic re-localisation and scene understanding via globally unique instance coordinate regression. arXiv preprint arXiv:1909.10239, 2019.

[12]Federico Camposeco, Andrea Cohen, Marc Pollefeys, and Torsten Sattler. Hybrid scene compression for visual localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 7653–7662, 2019.

[13]Liang-Chieh Chen, George Papandreou, Florian Schroff, and Hartwig Adam. Rethinking atrous convolution for semantic image segmentation. CoRR, abs/1706.05587, 2017.

[14]Liang-Chieh Chen, George Papandreou, Iasonas Kokkinos, Kevin Murphy, and Alan L Yuille. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE transactions on pattern analysis and machine intelligence, 40(4):834–848, 2017.

[15]Daniel DeTone, Tomasz Malisiewicz, and Andrew Rabinovich. SuperPoint: Self-supervised interest point detection and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pages 224–236, 2018.

[16]Michael Donoser and Dieter Schmalstieg. Discriminative feature-to-point matching in image-based localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 516–523, 2014.

[17]Mihai Dusmanu, Ignacio Rocco, Tomas Pajdla, Marc Pollefeys, Josef Sivic, Akihiko Torii, and Torsten Sattler. D2-Net: A trainable CNN for joint description and detection of local features. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, June 16-20, 2019, pages 8092–8101, 2019.

[18]Martin A Fischler and Robert C Bolles. Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Communications of the ACM, 24(6):381–395, 1981.

[19]Yixiao Ge, Haibo Wang, Feng Zhu, Rui Zhao, and Hongsheng Li. Self-supervising fine-grained region similarities for large-scale image localization. arXiv preprint arXiv:2006.03926, 2020.

[20]Yisheng He, Wei Sun, Haibin Huang, Jianran Liu, Haoqiang Fan, and Jian Sun. PVN3D: A deep point-wise 3D keypoints voting network for 6DoF pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 11632–11641, 2020.

[21]Zhaoyang Huang, Yan Xu, Jianping Shi, Xiaowei Zhou, Hujun Bao, and Guofeng Zhang. Prior guided dropout for robust visual localization in dynamic environments. In Proceedings of the IEEE International Conference on Computer Vision, pages 2791–2800, 2019.

[22]Marco Imperoli and Alberto Pretto. Active detection and localization of textureless objects in cluttered environments. arXiv preprint arXiv:1603.07022, 2016.

[23]Alex Kendall and Roberto Cipolla. Geometric loss functions for camera pose regression with deep learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5974–5983, 2017.

[24]Alex Kendall, Matthew Grimes, and Roberto Cipolla. PoseNet: A convolutional network for real-time 6-DoF camera relocalization. In Proceedings of the IEEE international conference on computer vision, pages 2938–2946, 2015.

[25]Xiaotian Li, Shuzhe Wang, Yi Zhao, Jakob Verbeek, and Juho Kannala. Hierarchical scene coordinate classification and regression for visual localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 11983–11992, 2020.

[26]Yunpeng Li, Noah Snavely, and Daniel P Huttenlocher. Location recognition using prioritized feature matching. In European conference on computer vision, pages 791–804. Springer, 2010.

[27]Yutian Lin, Lingxi Xie, Yu Wu, Chenggang Yan, and Qi Tian. Unsupervised person re-identification via softened similarity learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3390–3399, 2020.

[28]Yuan Liu, Zehong Shen, Zhixuan Lin, Sida Peng, Hujun Bao, and Xiaowei Zhou. GIFT: Learning transformation-invariant dense visual descriptors via group CNNs. In Advances in Neural Information Processing Systems, pages 6990–7001, 2019.

[29]Jonathan Long, Evan Shelhamer, and Trevor Darrell. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 3431–3440, 2015.

[30]David G Lowe. Distinctive image features from scale-invariant keypoints. International journal of computer vision, 60(2):91–110, 2004.

[31]Jean-Michel Morel and Guoshen Yu. ASIFT: A new framework for fully affine invariant image comparison. SIAM journal on imaging sciences, 2(2):438–469, 2009.

[32]Yair Movshovitz-Attias, Alexander Toshev, Thomas K Leung, Sergey Ioffe, and Saurabh Singh. No fuss distance metric learning using proxies. In Proceedings of the IEEE International Conference on Computer Vision, pages 360–368, 2017.

[33]Richard A Newcombe, Shahram Izadi, Otmar Hilliges, David Molyneaux, David Kim, Andrew J Davison, Pushmeet Kohli, Jamie Shotton, Steve Hodges, and Andrew W Fitzgibbon. KinectFusion: Real-time dense surface mapping and tracking. In ISMAR, volume 11, pages 127–136, 2011.

[34]Markus Oberweger, Mahdi Rad, and Vincent Lepetit. Making deep heatmaps robust to partial occlusions for 3d object pose estimation. In Proceedings of the European Conference on Computer Vision (ECCV), pages 119–134, 2018.

[35]Yuki Ono, Eduard Trulls, Pascal Fua, and Kwang Moo Yi. LF-Net: Learning local features from images. In Advances in neural information processing systems, pages 6234–6244, 2018.

[36]Jeremie Papon, Alexey Abramov, Markus Schoeler, and Florentin Wörgötter. Voxel cloud connectivity segmentation - supervoxels for point clouds. In Computer Vision and Pattern Recognition (CVPR), 2013 IEEE Conference on, Portland, Oregon, June 22-27 2013.

[37]Georgios Pavlakos, Xiaowei Zhou, Aaron Chan, Konstantinos G Derpanis, and Kostas Daniilidis. 6-DoF object pose from semantic keypoints. In 2017 IEEE international conference on robotics and automation (ICRA), pages 2011–2018. IEEE, 2017.

[38]Sida Peng, Yuan Liu, Qixing Huang, Xiaowei Zhou, and Hujun Bao. PVNet: Pixel-wise voting network for 6DoF pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 4561–4570, 2019.

[39]Qi Qian, Lei Shang, Baigui Sun, Juhua Hu, Hao Li, and Rong Jin. SoftTriple loss: Deep metric learning without triplet sampling. In Proceedings of the IEEE International Conference on Computer Vision, pages 6450–6458, 2019.

[40]Tong Qin, Peiliang Li, and Shaojie Shen. VINS-Mono: A robust and versatile monocular visual-inertial state estimator. IEEE Transactions on Robotics, 34(4):1004–1020, 2018.

[41]Jerome Revaud, Cesar De Souza, Martin Humenberger, and Philippe Weinzaepfel. R2D2: Reliable and repeatable detector and descriptor. In Advances in Neural Information Processing Systems, pages 12405–12415, 2019.

[42]Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-Net: Convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention, pages 234–241. Springer, 2015.

[43]Ethan Rublee, Vincent Rabaud, Kurt Konolige, and Gary Bradski. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the IEEE international conference on Computer Vision (ICCV), pages 2564–2571. IEEE, 2011.

[44]Paul-Edouard Sarlin, Cesar Cadena, Roland Siegwart, and Marcin Dymczyk. From coarse to fine: Robust hierarchical localization at large scale. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 12716–12725, 2019.

[45]Torsten Sattler, Bastian Leibe, and Leif Kobbelt. Improving image-based localization by active correspondence search. In European conference on computer vision, pages 752–765. Springer, 2012.

[46]Johannes L Schönberger and Jan-Michael Frahm. Structure-from-motion revisited. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 4104–4113, 2016.

[47]Johannes Lutz Schönberger, Enliang Zheng, Marc Pollefeys, and Jan-Michael Frahm. Pixelwise view selection for unstructured multi-view stereo. In European Conference on Computer Vision (ECCV), 2016.

[48]Florian Schroff, Dmitry Kalenichenko, and James Philbin. FaceNet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 815–823, 2015.

[49]Jamie Shotton, Ben Glocker, Christopher Zach, Shahram Izadi, Antonio Criminisi, and Andrew Fitzgibbon. Scene coordinate regression forests for camera relocalization in RGB-D images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2930–2937, 2013.

[50]Chen Song, Jiaru Song, and Qixing Huang. HybridPose: 6D object pose estimation under hybrid representations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 431–440, 2020.

[51]Julien Valentin, Matthias Nießner, Jamie Shotton, Andrew Fitzgibbon, Shahram Izadi, and Philip HS Torr. Exploiting uncertainty in regression forests for accurate camera relocalization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 4400–4408, 2015.

[52]Bing Wang, Changhao Chen, Chris Xiaoxuan Lu, Peijun Zhao, Niki Trigoni, and Andrew Markham. AtLoc: Attention guided camera localization. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 34, pages 10393–10401, 2020.

[53]Qianqian Wang, Xiaowei Zhou, Bharath Hariharan, and Noah Snavely. Learning feature descriptors using camera pose supervision. arXiv preprint arXiv:2004.13324, 2020.

[54]Philippe Weinzaepfel, Gabriela Csurka, Yohann Cabon, and Martin Humenberger. Visual localization by learning objects-of-interest dense match regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 5634–5643, 2019.

[55]Changchang Wu et al. VisualSFM: A visual structure from motion system. 2011.

[56]Chao-Yuan Wu, R Manmatha, Alexander J Smola, and Philipp Krahenbuhl. Sampling matters in deep embedding learning. In Proceedings of the IEEE International Conference on Computer Vision, pages 2840–2848, 2017.

[57]Tong Xiao, Shuang Li, Bochao Wang, Liang Lin, and Xiaogang Wang. Joint detection and identification feature learning for person search. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3415–3424, 2017.

[58]Yan Xu, Zhaoyang Huang, Kwan-Yee Lin, Xinge Zhu, Jianping Shi, Hujun Bao, Guofeng Zhang, and Hongsheng Li. SelfVoxeLO: Self-supervised LiDAR odometry with voxel-based deep neural networks. Conference on Robot Learning, 2020.

[59]Fei Xue, Xin Wu, Shaojun Cai, and Junqiu Wang. Learning multi-view camera relocalization with graph neural networks. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 11372–11381. IEEE, 2020.

[60]Fisher Yu and Vladlen Koltun. Multi-scale context aggregation by dilated convolutions. In 4th International Conference on Learning Representations, ICLR 2016, San Juan, Puerto Rico, May 2-4, 2016, Conference Track Proceedings, 2016.

[61]Bernhard Zeisl, Torsten Sattler, and Marc Pollefeys. Camera pose voting for large-scale image-based localization. In Proceedings of the IEEE International Conference on Computer Vision, pages 2704–2712, 2015.

[62]Guofeng Zhang, Zilong Dong, Jiaya Jia, Tien-Tsin Wong, and Hujun Bao. Efficient non-consecutive feature tracking for structure-from-motion. In European Conference on Computer Vision, pages 422–435. Springer, 2010.

[63]Liang Zheng, Yujia Huang, Huchuan Lu, and Yi Yang. Pose-invariant embedding for deep person re-identification. IEEE Transactions on Image Processing, 28(9):4500–4509, 2019.

[64]Zilong Zhong, Zhong Qiu Lin, Rene Bidart, Xiaodan Hu, Ibrahim Ben Daya, Zhifeng Li, Wei-Shi Zheng, Jonathan Li, and Alexander Wong. Squeeze-and-attention networks for semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 13065–13074, 2020.

[65]Zhun Zhong, Liang Zheng, Zhiming Luo, Shaozi Li, and Yi Yang. Learning to adapt invariance in memory for person re-identification. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020.

[66]Lei Zhou, Zixin Luo, Tianwei Shen, Jiahui Zhang, Mingmin Zhen, Yao Yao, Tian Fang, and Long Quan. KFNet: Learning temporal camera relocalization using Kalman filtering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4919–4928, 2020.

[67]Siyu Zhu, Tianwei Shen, Lei Zhou, Runze Zhang, Jinglu Wang, Tian Fang, and Long Quan. Parallel structure from motion from local increment to global averaging. arXiv preprint arXiv:1702.08601, 2017.
