
SenseTime's Groundbreaking Research at ICCV 2023 Showcases China's Innovation Power

October 5, 2023 – The International Conference on Computer Vision (ICCV), a world-renowned venue for artificial intelligence and computer vision research, is taking place in Paris, France, from October 2 to 6, 2023. As one of the top three global computer vision conferences, alongside CVPR and ECCV, this year’s ICCV received 8,068 submissions and accepted 2,160 of them, an acceptance rate of 26.8%, slightly higher than the 25.9% recorded at the previous ICCV in 2021.

SenseTime, a leading global artificial intelligence (AI) software company, and its joint laboratories were honored to have 49 papers accepted for presentation at the conference. These papers cover a wide range of cutting-edge topics related to large models and generative AI, including text-to-image generation, 3D digital humans, autonomous driving, object detection, and video segmentation, among others. Leveraging its AI infrastructure, SenseCore, and the “SenseNova” Foundation Model Sets, SenseTime has achieved numerous technological breakthroughs and research innovations in generative AI and large visual models.

Prof. Wang Xiaogang, Co-founder and Chief Scientist at SenseTime, said, “SenseTime's continuous dedication to AI infrastructure development, emphasis on industry-driven academic research, and cultivation of talent at all levels are the cornerstones supporting our continuous innovative achievements on the global academic stage. We are actively embracing the new research paradigm introduced by large models, continually enhancing our R&D system, and persistently integrating fundamental research with business development. SenseTime strives to contribute valuable technological achievements to the industry.”

SenseTime's Pioneering Research Unveils Promising Advances in Large Models and Generative AI

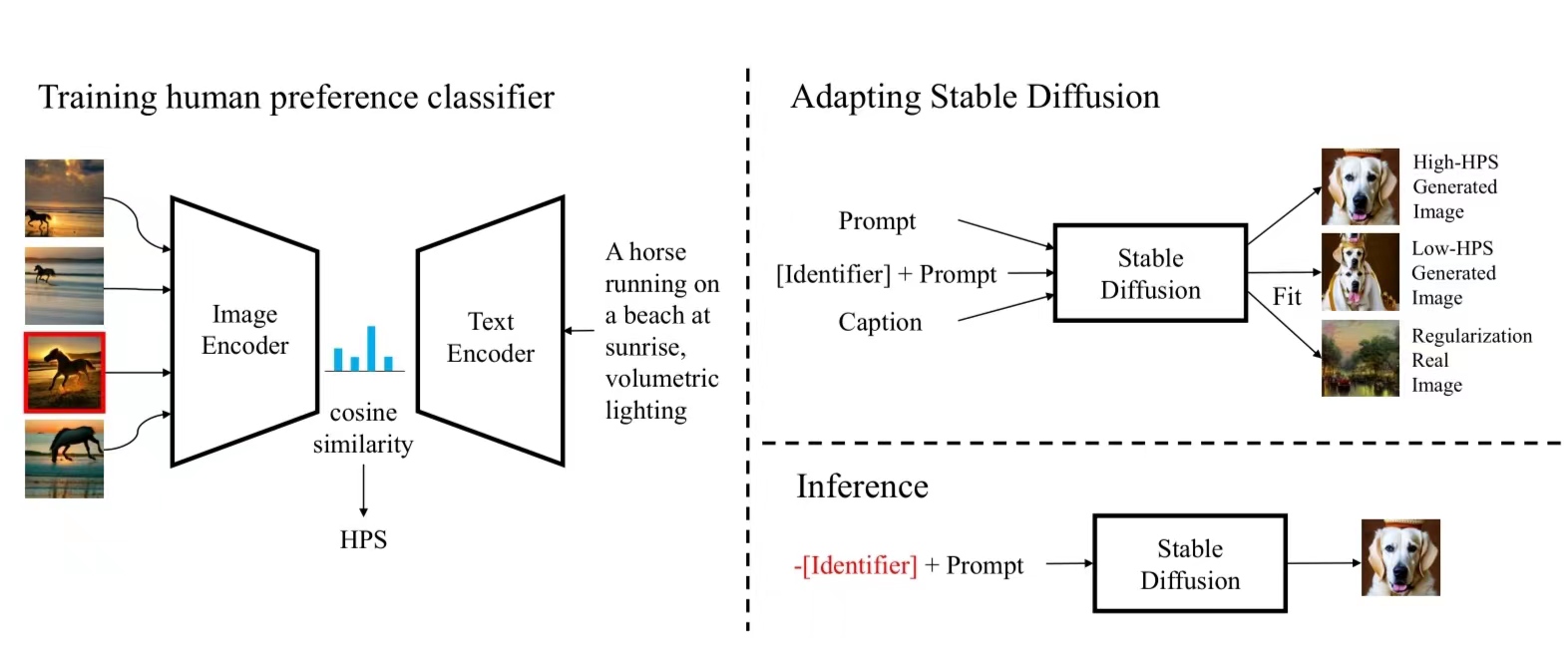

Growing global attention to large models and generative AI has spurred new research directions. SenseTime’s paper “Human Preference Score: Better Aligning Text-to-Image Models with Human Preference” proposes that Stable Diffusion can be adapted to better align with human preferences and intentions when guided by the proposed human preference classifier. Incorporating human preference significantly improves the quality of images generated by Stable Diffusion; in particular, the adapted model can resolve challenging cases such as awkward combinations of facial expressions and limbs.

Incorporating human preferences into Stable Diffusion model training
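To make the idea of a human preference classifier more concrete, the sketch below scores each candidate image against its prompt as a scaled cosine similarity between embeddings, and trains with a softmax cross-entropy over the candidates so that the image a human picked receives the highest score. This is a simplified, hypothetical illustration, not SenseTime’s released code: the embedding vectors stand in for the outputs of a CLIP-style text/image encoder, and the function names and the scale factor of 100 are assumptions.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def preference_score(text_emb: Sequence[float], image_emb: Sequence[float],
                     scale: float = 100.0) -> float:
    """Hypothetical preference score: prompt/image cosine similarity times an assumed scale."""
    return scale * cosine(text_emb, image_emb)


def choice_loss(text_emb: Sequence[float], image_embs: Sequence[Sequence[float]],
                chosen: int) -> float:
    """Softmax cross-entropy over candidate images.

    The loss is small when the human-chosen image has the highest score.
    """
    scores = [preference_score(text_emb, e) for e in image_embs]
    m = max(scores)  # subtract the max before exponentiating for numerical stability
    log_z = m + math.log(sum(math.exp(s - m) for s in scores))
    return log_z - scores[chosen]


def rank_candidates(text_emb: Sequence[float],
                    image_embs: Sequence[Sequence[float]]) -> List[int]:
    """Return candidate indices ordered from most to least preferred."""
    return sorted(range(len(image_embs)),
                  key=lambda i: preference_score(text_emb, image_embs[i]),
                  reverse=True)
```

For example, with the prompt embedding `[1.0, 0.0]` and candidate image embeddings `[[0.0, 1.0], [1.0, 0.1], [0.7, 0.7]]`, `rank_candidates` returns `[1, 2, 0]`. In a guided-generation setup, a score of this kind could be used to rank or filter samples; how the paper actually adapts Stable Diffusion with its classifier is not reproduced here.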

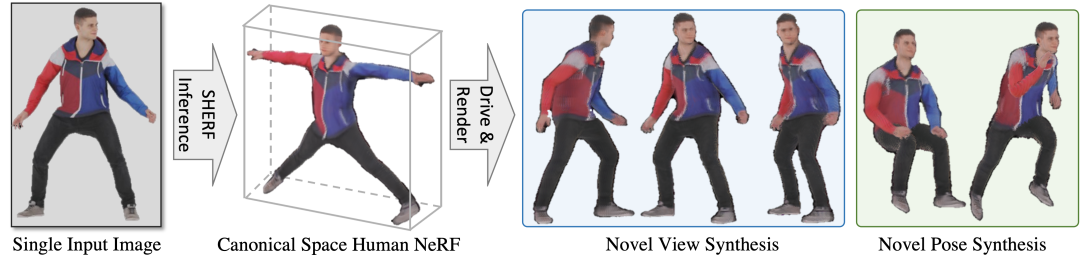

Furthermore, SenseTime's research team tackles the longstanding challenge of generating 3D digital humans in their paper “SHERF: Generalizable Human NeRF from a Single Image”. The paper proposes SHERF, the first generalizable Human NeRF model for recovering animatable 3D humans from a single input image. With a single inference pass on one image, SHERF reconstructs a Human NeRF in canonical space that can be animated and rendered for novel view and novel pose synthesis. Beyond enabling 3D human reconstruction and animation, this approach holds considerable potential for streamlining the creative process of producing 3D digital humans.

SHERF learns a Generalizable Human NeRF to animate 3D humans from a single image
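Like other NeRF-based methods, SHERF ultimately produces pixels by volume rendering: densities and colors sampled along each camera ray are alpha-composited front to back. The function below is a minimal sketch of that standard NeRF compositing step only, in plain Python; how SHERF predicts per-sample densities and colors from a single image is not shown, and the name `composite_ray` is hypothetical.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def composite_ray(sigmas: Sequence[float], colors: Sequence[RGB],
                  deltas: Sequence[float]) -> RGB:
    """Alpha-composite samples along one ray with the standard NeRF quadrature.

    sigmas: volume density at each sample (>= 0), ordered near to far
    colors: RGB color at each sample
    deltas: distance from each sample to the next
    """
    transmittance = 1.0  # T_i = exp(-sum_{j<i} sigma_j * delta_j)
    out = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # contribution of this sample
        for k in range(3):
            out[k] += weight * color[k]
        transmittance *= 1.0 - alpha            # light that survives past this segment
    return (out[0], out[1], out[2])
```

A nearly opaque sample hides everything behind it: `composite_ray([1e3, 1e3], [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)], [1.0, 1.0])` returns `(1.0, 0.0, 0.0)`, while an empty ray (all densities zero) returns black.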

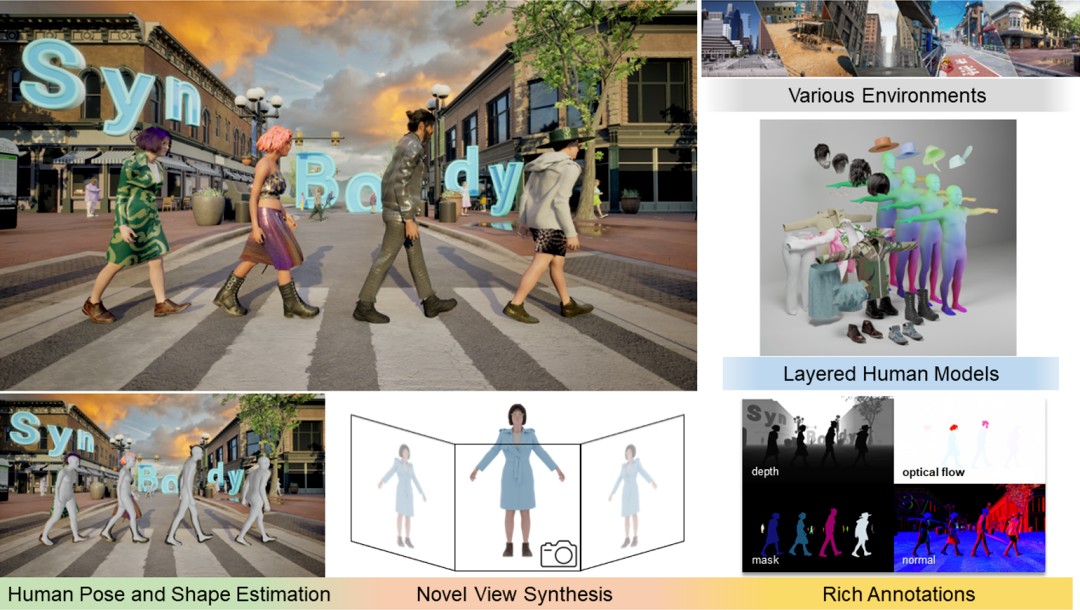

High-quality 3D human datasets are the foundation for many perception, reconstruction, and generative AI models in human-centric research. SenseTime's research team has made significant strides in this area with their paper “SynBody: Synthetic Dataset with Layered Human Models for 3D Human Perception and Modeling”. The team introduced SynBody, a new synthetic dataset built with a layered, clothed parametric human model that can generate a diverse range of subjects. The dataset provides a large collection of virtual human data for training 3D human perception and reconstruction models.

In addition, the team has released XRFeitoria, an open-source synthetic data rendering toolkit. With its user-friendly Python API and CLI tools, XRFeitoria simplifies the creation of synthetic datasets.

SynBody is a large-scale synthetic dataset for human perception and modeling

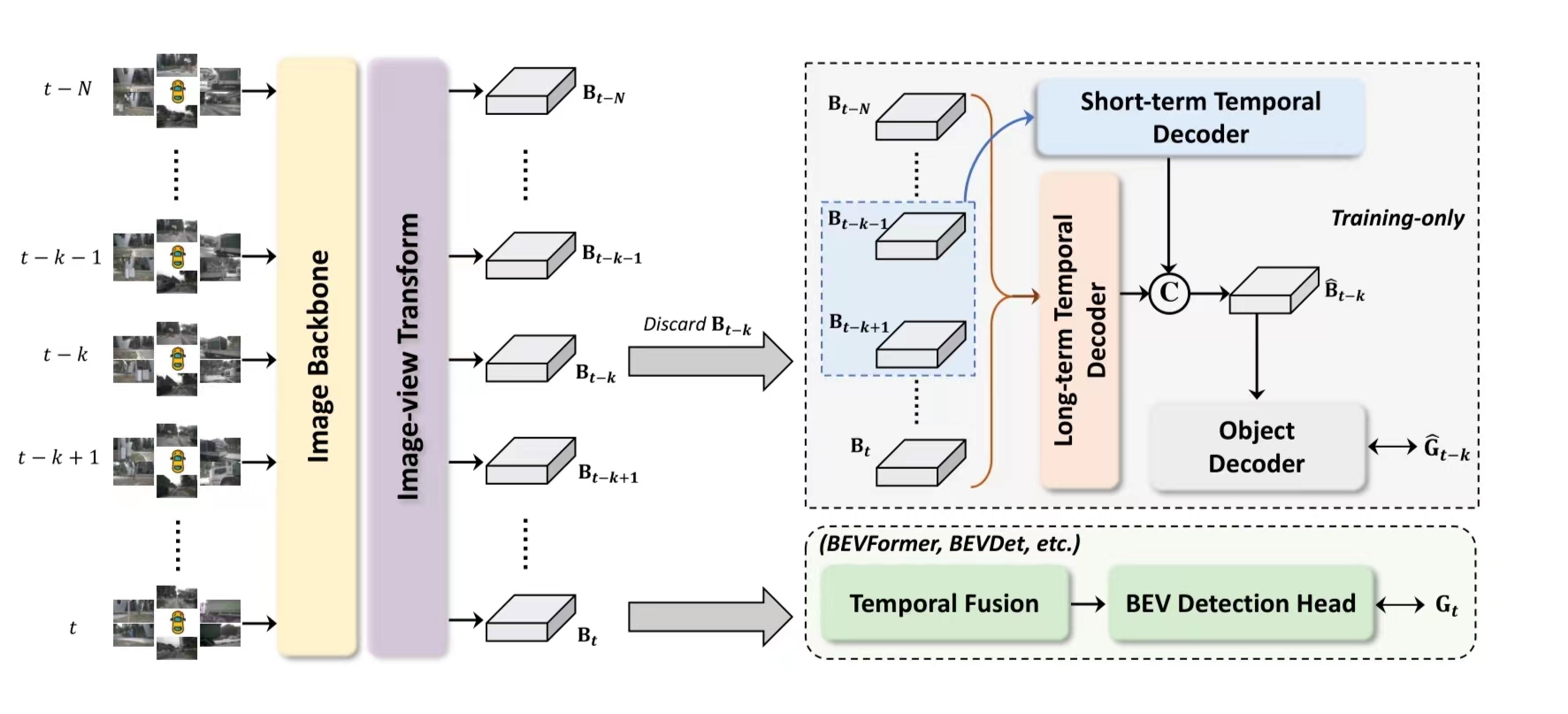

In 3D object detection for autonomous driving, SenseTime's research team proposes a new paradigm for multi-view 3D detection, named Historical Object Prediction (HoP), in their paper “Temporal Enhanced Training of Multi-view 3D Object Detector via Historical Object Prediction”. HoP makes more effective use of temporal information when training multi-view 3D object detectors.

HoP achieves 68.5% NDS and 62.4% mAP with a ViT-L backbone on the nuScenes test set, outperforming all 3D object detectors on the leaderboard. As a plug-and-play approach, HoP can be easily incorporated into state-of-the-art BEV (bird's-eye view) detection frameworks, reshaping how temporal information is used for 3D object detection.

Framework of HoP
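The 68.5% NDS figure refers to the nuScenes Detection Score, which combines mAP with five mean true-positive error metrics (translation, scale, orientation, velocity, and attribute error): NDS = (1/10)[5·mAP + Σ(1 − min(1, mTP))]. The helper below is a small sketch of that published formula; the function name and dictionary layout are illustrative choices, and the numbers in the usage note are made up rather than HoP’s reported errors.

```python
from typing import Mapping

# The five mean true-positive error metrics used by the nuScenes detection benchmark.
TP_METRICS = ("mATE", "mASE", "mAOE", "mAVE", "mAAE")


def nuscenes_detection_score(mean_ap: float, tp_errors: Mapping[str, float]) -> float:
    """Compute NDS = (5 * mAP + sum(1 - min(1, mTP))) / 10.

    mean_ap: mean average precision in [0, 1]
    tp_errors: the five mean TP errors; each is clipped at 1, so a very large
               error contributes 0 to the score instead of a negative value.
    """
    missing = set(TP_METRICS) - set(tp_errors)
    if missing:
        raise ValueError(f"missing TP metrics: {sorted(missing)}")
    tp_score = sum(1.0 - min(1.0, tp_errors[name]) for name in TP_METRICS)
    return (5.0 * mean_ap + tp_score) / 10.0
```

For instance, `nuscenes_detection_score(0.5, {"mATE": 0.5, "mASE": 0.25, "mAOE": 0.3, "mAVE": 0.3, "mAAE": 0.15})` evaluates to roughly 0.6. Because mAP carries half of the total weight, NDS rewards both finding objects and estimating their pose, size, velocity, and attributes accurately.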

SenseTime's outstanding achievements at this year's ICCV encompass a wide range of areas including object detection, video segmentation, 3D perception and reconstruction, semi-supervised learning, NeRF, and more. These significant technological breakthroughs underscore SenseTime's unwavering commitment to pushing the boundaries of AI research.

SenseTime's Commitment to Building an Open Ecosystem

SenseTime recognizes the importance of openness and collaboration in fostering a new ecosystem that includes enterprises, universities, and research institutes. SenseTime actively engages in academic exchanges and competitions to facilitate the transformation of research into practical business applications and to explore collaborative models between industry and academia.

Concurrently, SenseTime is committed to promoting AI infrastructure and open-source ecosystems, working alongside developers to drive the development of the AI community. Its open-source platform, OpenMMLab, is highly popular on GitHub and has received over 87,000 stars since its launch in 2018. SenseTime’s open-source projects have expanded into various domains, including decision intelligence, large language models, extended reality, data platforms, high-performance training and inference frameworks, and AI agent frameworks. These projects provide comprehensive support for new breakthroughs in academic research and industrial implementation.

Notably, SenseTime's collaboration with the Joint Lab has resulted in InternLM, a large language model (LLM) that has made a remarkable impact on the open-source community and industry. The latest InternLM-20B model offers advanced performance and convenient application, achieving capabilities comparable to the benchmark Llama2-70B model with less than one-third of its parameters.

Adhering to the principles of openness in development, SenseTime, along with its industry partners, eagerly embraces the new wave of technological revolution in large models. By enabling AI technology to unleash broader industrial value, SenseTime aims to contribute to the advancement of AI and its practical applications.
