- Core Technology

- Based on the foundation of its proprietary technologies and SenseCore AI infrastructure, SenseTime has rapidly opened up AI applications in multiple vertical scenarios and is empowering a wide range of industries.

- Camera Perception

- LiDAR Perception

- Multi-Sensor Fusion

- Behavior Prediction of Vehicle / Pedestrian / Bicycle

- Path Planning, Decision Making and Control

- HD Map and Localization

- Adaptation and Deployment of Multi-chip Platform

- Camera Configuration

- Quality Management System


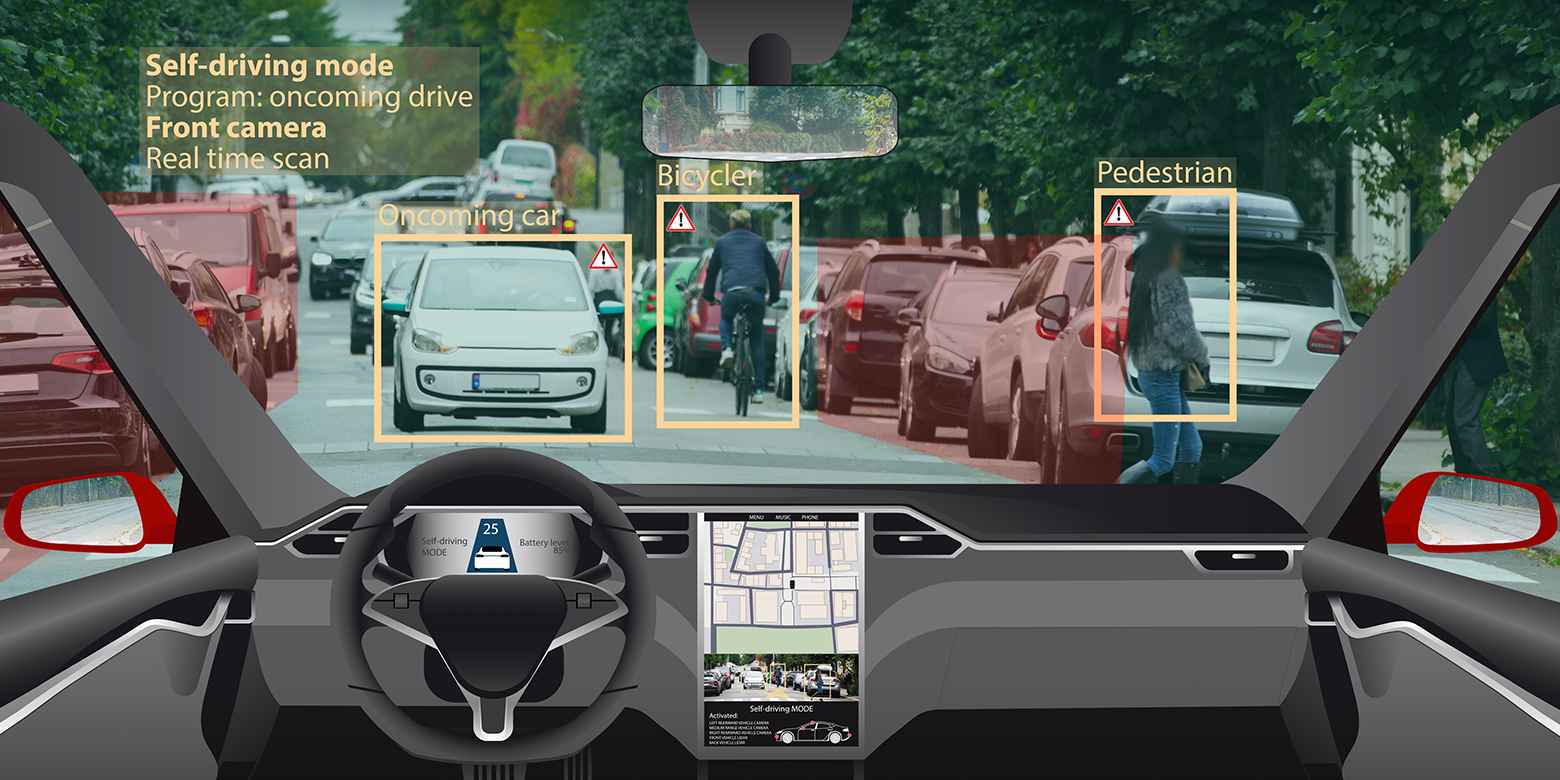

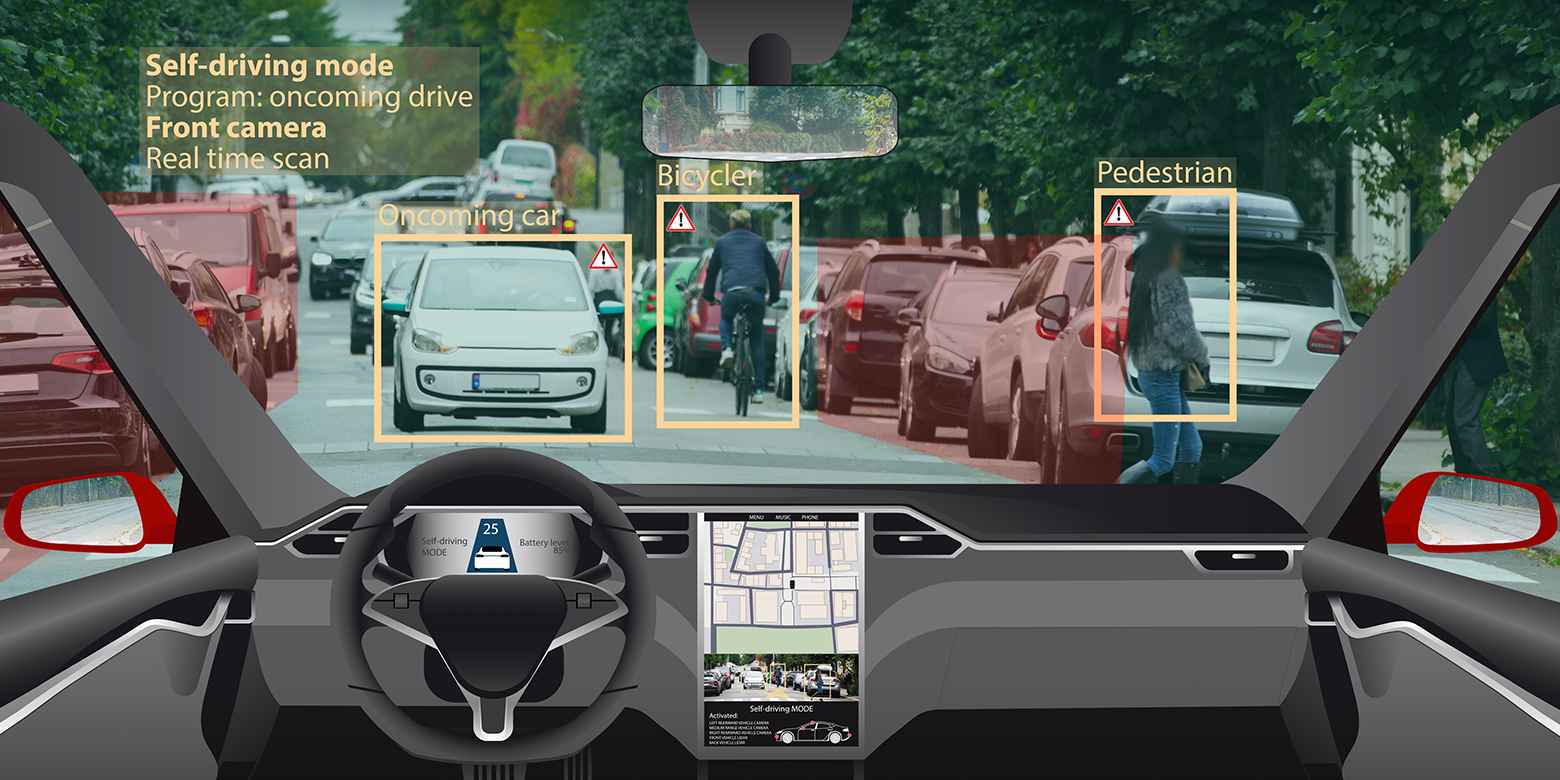

Camera Perception

Drawing on SenseTime's experience in computer vision, our camera perception technology accurately detects lane lines, road edges, drivable areas, vehicles, pedestrians, traffic signs, and traffic lights using a monocular camera. Our high-performance, lightweight object detection, tracking, and recognition technologies have also been widely adopted in Driver Monitoring Systems, Occupancy Monitoring Systems, and facial recognition access systems. The product can operate under challenging lighting conditions such as low light and backlight.


LiDAR Perception

Our LiDAR perception algorithm supports different types of LiDAR and application scenarios. It accurately detects and tracks traffic participants and unknown objects in scenarios such as autonomous driving and V2X.
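The page does not say which tracking method is used. As an illustrative sketch only, assuming each LiDAR detection has already been reduced to a 2D object centroid in metres, the toy tracker below keeps object identities across frames using greedy nearest-neighbour association with a distance gate. The class name and the `gate` value are hypothetical; production trackers typically add motion prediction (for example a Kalman filter) and optimal assignment (for example the Hungarian algorithm).

```python
import math
from itertools import count
from typing import Dict, List, Tuple

Point = Tuple[float, float]


class NearestNeighborTracker:
    """Hypothetical toy tracker: greedy nearest-neighbour association with a distance gate."""

    def __init__(self, gate: float = 2.0) -> None:
        self.gate = gate                     # assumed max association distance, in metres
        self.tracks: Dict[int, Point] = {}   # track id -> last known centroid
        self._ids = count()                  # source of fresh track ids

    def update(self, detections: List[Point]) -> Dict[int, Point]:
        # Every (distance, track id, detection index) pair, closest first.
        pairs = sorted(
            (math.dist(pos, det), tid, di)
            for tid, pos in self.tracks.items()
            for di, det in enumerate(detections)
        )
        used_tracks, used_dets = set(), set()
        new_tracks: Dict[int, Point] = {}
        for dist, tid, di in pairs:
            if dist > self.gate:
                break  # all remaining pairs are even farther apart
            if tid in used_tracks or di in used_dets:
                continue  # each track and detection is matched at most once
            new_tracks[tid] = detections[di]
            used_tracks.add(tid)
            used_dets.add(di)
        # Unmatched detections start new tracks; unmatched old tracks are dropped.
        for di, det in enumerate(detections):
            if di not in used_dets:
                new_tracks[next(self._ids)] = det
        self.tracks = new_tracks
        return dict(self.tracks)
```

Feeding two frames in which both objects move by half a metre keeps their original ids, while a detection far from every existing track starts a new one.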


Multi-Sensor Fusion

Our multi-sensor fusion system supports various sensor combinations, delivering lower latency, higher precision, and better fault tolerance.
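The page does not describe how sensor data is combined. As a minimal sketch, assuming independent sensors that each report a scalar estimate with a known noise variance, inverse-variance weighting shows why fusion can be more precise than any single sensor, and how skipping a failed sensor gives basic fault tolerance. The `Measurement` class and `fuse` function are hypothetical illustrations, not SenseTime's implementation.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class Measurement:
    """Hypothetical: one sensor's estimate of a scalar (e.g. range to a lead vehicle, in metres)."""
    value: Optional[float]  # None models a sensor that has dropped out
    variance: float         # assumed known measurement noise variance


def fuse(measurements: Sequence[Measurement]) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of independent measurements.

    Sensors reporting None are skipped, so the estimate degrades gracefully
    instead of failing when one sensor drops out.
    Returns (fused_value, fused_variance).
    """
    valid = [m for m in measurements if m.value is not None and m.variance > 0]
    if not valid:
        raise ValueError("no valid sensor measurements to fuse")
    weights = [1.0 / m.variance for m in valid]   # more precise sensors weigh more
    total = sum(weights)
    value = sum(w * m.value for w, m in zip(weights, valid)) / total
    return value, 1.0 / total                     # fused variance <= any single variance
```

With a camera range of 20.0 m (variance 4.0) and a LiDAR range of 21.0 m (variance 1.0), `fuse` returns about 20.8 m with a variance of about 0.8, lower than either sensor alone; if the LiDAR value is `None`, the result falls back to the camera's (20.0, 4.0).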


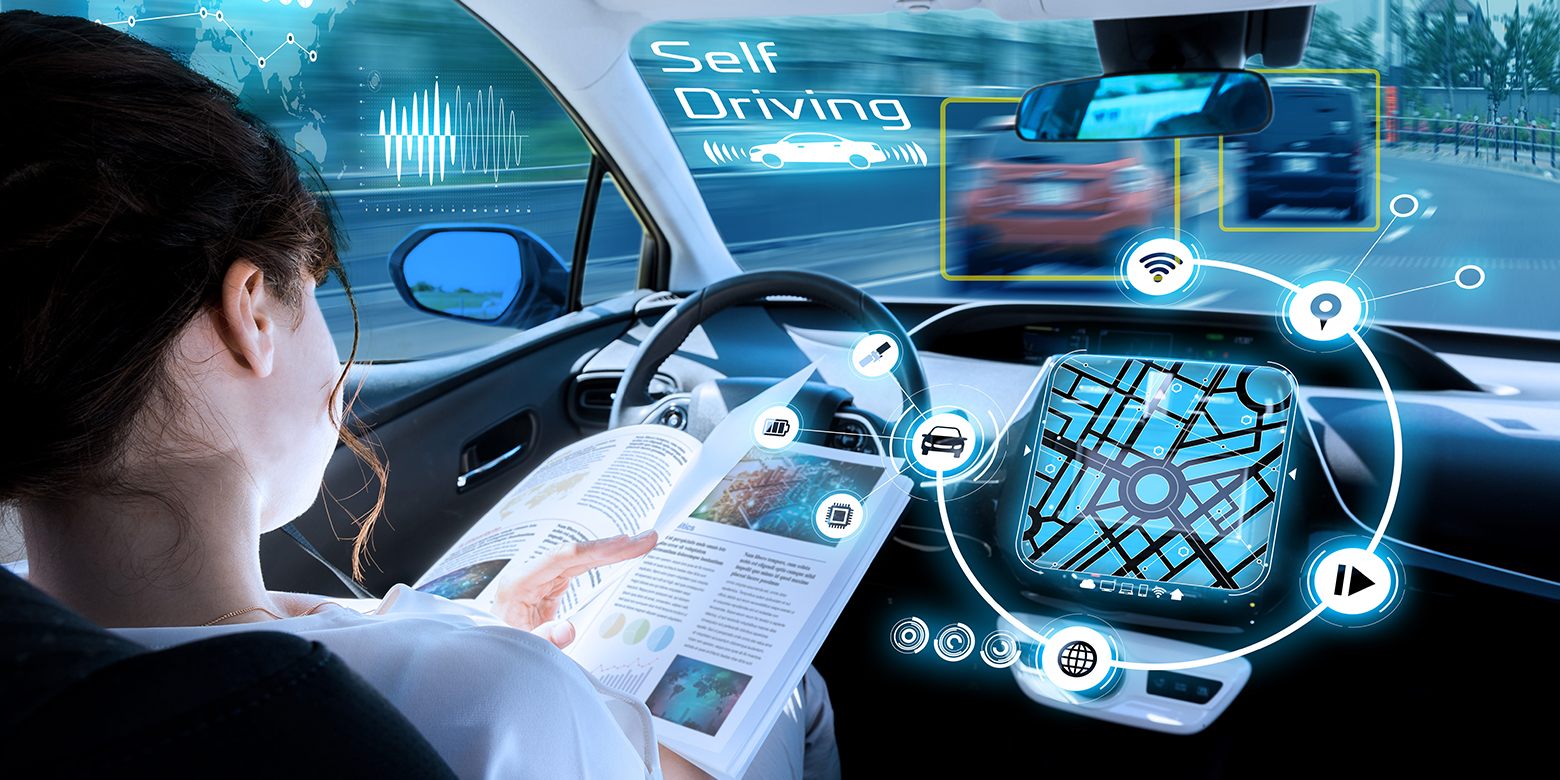

Behavior Prediction of Vehicle / Pedestrian / Bicycle

Our behavior prediction technology accurately predicts the behavior of vehicles, pedestrians, and bicycles in complex traffic scenes, including their intention to turn, change lanes, or cross the road and their awareness of the surrounding environment, and it outputs multiple potential trajectories. This provides reliable information for smarter planning and decision-making in autonomous driving.
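The page does not explain how multiple trajectories are generated. As an illustrative sketch only, assuming a small hypothetical set of maneuver intentions, each mapped to a fixed yaw rate, the code below rolls out one trajectory per intention with a simple Euler-integrated constant-speed, constant-yaw-rate motion model. Learned predictors are far richer; the maneuver names, yaw rates, horizon, and time step are all assumptions.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]

# Hypothetical maneuver set: yaw rate (rad/s) associated with each intention.
MANEUVERS: Dict[str, float] = {"keep_straight": 0.0, "turn_left": 0.3, "turn_right": -0.3}


def rollout(x: float, y: float, heading: float, speed: float,
            yaw_rate: float, horizon: float = 3.0, dt: float = 0.5) -> List[Point]:
    """Propagate constant speed and yaw rate with simple Euler steps."""
    points: List[Point] = []
    for _ in range(int(round(horizon / dt))):
        heading += yaw_rate * dt                 # rotate, then advance along new heading
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        points.append((x, y))
    return points


def predict_hypotheses(x: float, y: float, heading: float,
                       speed: float) -> Dict[str, List[Point]]:
    """Return one candidate trajectory per hypothetical maneuver."""
    return {name: rollout(x, y, heading, speed, w) for name, w in MANEUVERS.items()}
```

For an agent at the origin heading along +x at 10 m/s, `predict_hypotheses(0.0, 0.0, 0.0, 10.0)` yields six points per maneuver; the straight hypothesis ends at (30.0, 0.0), while the left and right hypotheses curve toward positive and negative y respectively.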


Path Planning, Decision Making and Control

By integrating information from multiple modules, the vehicle's own motion, and the surrounding environment, the technology enables safe, smart, and smooth decision-making and path planning in complex driving scenarios while providing accurate control of the vehicle.


HD Map and Localization

We can create city-scale, high-definition 3D maps using multi-sensor fusion technologies, including point cloud maps, localization maps, semantic maps, and routing maps. Based on these HD maps, we also provide high-precision localization in real time.


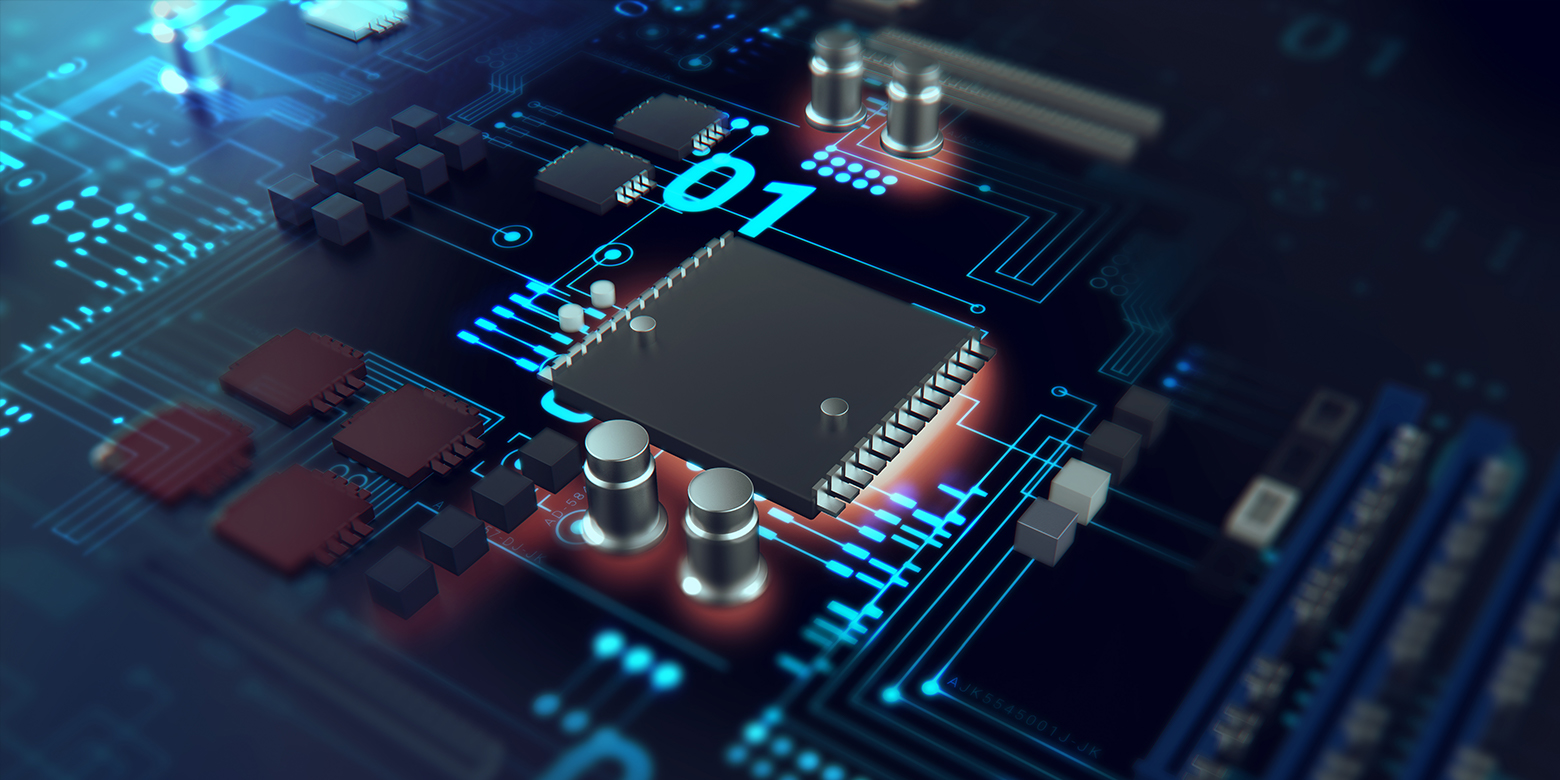

Adaptation and Deployment of Multi-chip Platform

Built on SenseParrots, our proprietary deep learning platform, together with our self-developed FPGA deployment toolchain and hardware accelerator, our algorithms can be deployed flexibly and easily on a variety of mainstream SoCs, supporting the mass production of autonomous driving technology. Leveraging our self-developed HPC and edge computing deployment framework, the product uses the computing resources of automotive AI chips (NPU/DSP/GPU) on mainstream in-cabin ECUs to achieve low latency and reduced CPU and memory usage.


Camera Configuration

The product adapts to mainstream in-cabin cameras (IR/RGB/RGB-IR) on the market, using optimized quantitative image-quality indicators to ensure high product consistency at the system level.


Quality Management System

SenseTime has established a comprehensive quality management system and was granted ISO 26262 ASIL-B functional safety certification and ASPICE L2 certification in February 2020 and July 2020, respectively.
